ChatGPT Risks for Insurance Brokers

Scott Bennett

10 July 2025


In 2025, ChatGPT is working its way into brokers’ day-to-day processes. From drafting client emails to comparing policies, brokers are finding new ways to save time and deliver value. Generative AI tools like ChatGPT open up exciting new possibilities, but it’s important to recognise and address the risks.

In September 2024, ASIC released a report warning that many companies in the financial and insurance industry were using AI without proper internal governance arrangements, exposing them to serious compliance risks.

It’s a good time for brokerages to consider their AI use. This article will explain three data privacy risks that Australian insurance brokers using ChatGPT should know about, and offer solutions to consider.

⚠️ Risk #1 - ChatGPT training on client data

Meet John, an Australian insurance broker. When he is busy, he sometimes uses ChatGPT to compare schedules and policy wordings for his clients. To do so, he uploads these documents, which contain his clients’ personal information, into ChatGPT.

Although ChatGPT can be helpful and improve John’s efficiency, unless he has changed his settings, OpenAI may use that client data to train its AI models.

This poses a risk of non-compliance with the Australian Privacy Principles and the Privacy Act 1988 (Cth). Consequences of non-compliance could include fines, regulatory scrutiny, or legal action.

✅ How can you prevent ChatGPT from training on your client data?

As of June 2025, there is an option to stop ChatGPT from training its AI models on your data.

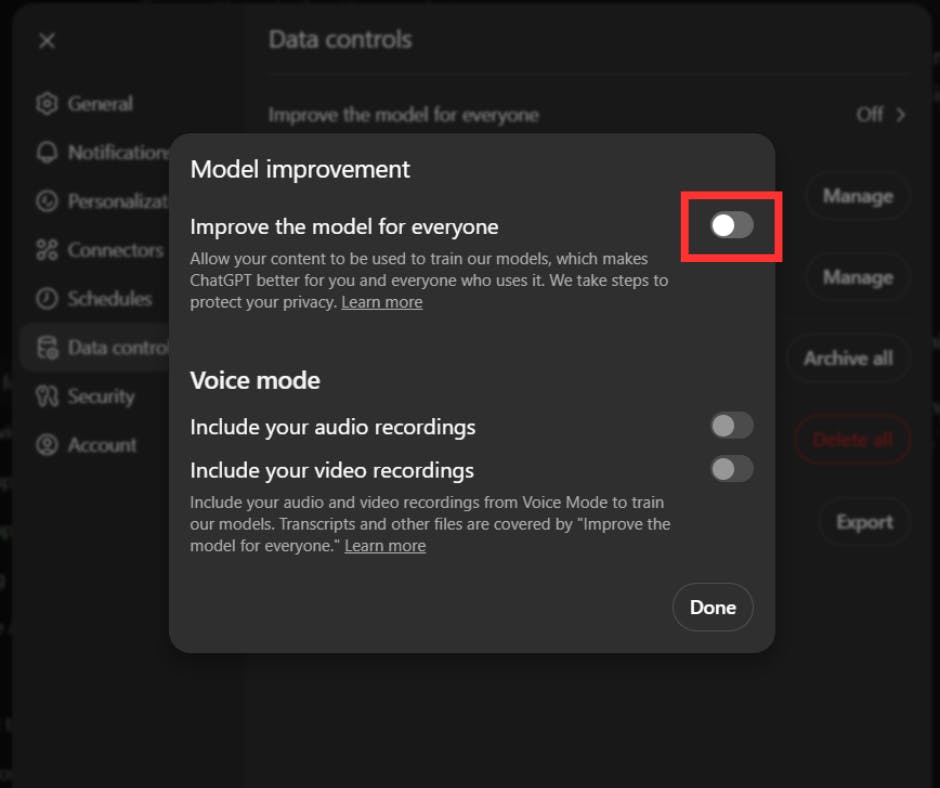

- First, navigate to the ‘Settings’ tab.

- Next, go to ‘Data Controls’.

- Then click on ‘Improve the model for everyone’ and toggle the setting off (see figure above).

Once that’s done, OpenAI should no longer use your new conversations, including your clients’ data, to train its AI models. Success! 🎉 This is one practical step brokers can take to reduce compliance risks related to OpenAI’s model training on client data.

For further information, please read OpenAI’s Privacy Policy and Terms of Service available on their website.

⚠️ Risk #2 - Overseas disclosure of clients’ personal information

John sometimes uses ChatGPT to help draft client correspondence by entering clients’ personal information and uploading related files. ChatGPT then efficiently generates the correspondence based on John’s instructions.

Although this is helpful, it creates compliance risks under Australian privacy law.

This is because ChatGPT’s servers are located overseas, primarily in the United States, so John’s input is transmitted internationally for processing before the output is returned to his screen. Although this takes only milliseconds, the information has still left Australia, and that creates compliance risks.

Australia has strict laws governing cross-border disclosure of personal and sensitive information, particularly where the destination country lacks privacy protections equivalent to Australia’s. In such cases, there’s a risk the data may be handled in ways that wouldn’t comply with Australian standards.

This kind of overseas disclosure could raise issues, particularly under Australian Privacy Principle 8 (cross-border disclosure of personal information) and related provisions of the Privacy Act 1988 (Cth).

Possible Solutions

✅ Update the brokerage’s privacy policy

We’ve noticed that many brokerages’ privacy policies list the countries to which personal or sensitive information may be disclosed during the broking process. Updating the privacy policy to reflect overseas disclosures arising from ChatGPT use could help the brokerage meet its disclosure obligations to clients and improve transparency.

✅ De-identify clients’ personal and sensitive information

De-identifying clients’ personal and sensitive information allows brokers to continue using ChatGPT while minimising risks related to overseas data disclosure.

This involves removing or redacting identifying details before uploading material to ChatGPT.

Under the Privacy Act 1988 (Cth), personal and sensitive information about a client could include:

- Name

- Address, email address, phone number

- Date of birth

- Any government identifiers (such as TFNs)

- Financial details

- Policy numbers

- Health information

Disclaimer: This list is for illustrative purposes and is non-exhaustive. Accuracy should be independently verified. This does not constitute legal advice; it is meant for informational purposes only.

Personal information and sensitive information are both defined under the Privacy Act 1988. For the most accurate guidance on what specific information should be removed, seeking legal advice may be appropriate.

By removing personal and sensitive information from schedules, email drafts, and other client materials before ChatGPT use, brokers can reduce the risk of disclosing this client data overseas.
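For brokerages with some in-house technical capability, part of this redaction step could be automated before text is pasted into ChatGPT. The Python sketch that follows is illustrative only: the `redact` function, its patterns, and the policy number format (`POL` followed by six or more digits) are hypothetical assumptions, not a standard. It assumes a simplified nine-digit TFN and common Australian phone formats, and it cannot detect names or street addresses unless the broker supplies them. Any output should still be checked by a person before upload.

```python
import re
from typing import Iterable

# Illustrative patterns only (assumptions, not an official standard).
# They will miss identifiers written in other formats and cannot
# detect names or street addresses on their own.
PATTERNS = {
    # Email addresses, e.g. jane@example.com.au
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # Australian phone numbers starting 02, 03, 04, 07, 08 or +61
    "PHONE": re.compile(r"(?:\+61\s?|\b0)[2-478](?:[ -]?\d){8}\b"),
    # Hypothetical policy number format: "POL" followed by 6+ digits
    "POLICY": re.compile(r"\bPOL-?\d{6,}\b", re.IGNORECASE),
    # Simplified TFN: nine digits, optionally grouped in threes
    "TFN": re.compile(r"\b\d{3}[ -]?\d{3}[ -]?\d{3}\b"),
    # Dates written as d/m/yyyy or dd/mm/yyyy (e.g. dates of birth)
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),
}


def redact(text: str, known_names: Iterable[str] = ()) -> str:
    """Replace likely identifiers with placeholder tags such as [EMAIL]."""
    # Names cannot be reliably detected by pattern, so the caller
    # supplies the client names that appear in the document.
    for name in known_names:
        text = re.sub(re.escape(name), "[NAME]", text, flags=re.IGNORECASE)
    # Patterns run in dictionary order, so phone numbers are tagged
    # before the TFN pattern runs (e.g. "+61 412 345 678" becomes
    # [PHONE] rather than "+61 [TFN]").
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    sample = (
        "Client Jane Citizen (DOB 15/03/1980), email jane.citizen@example.com, "
        "phone 0412 345 678, TFN 123 456 789, policy POL-1234567."
    )
    print(redact(sample, known_names=["Jane Citizen"]))
    # prints: Client [NAME] (DOB [DATE]), email [EMAIL], phone [PHONE], TFN [TFN], policy [POLICY].
```

Pattern matching like this is a first pass rather than a guarantee of de-identification: free-text details such as street addresses or medical history will pass through unchanged, which is why a manual review remains important.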

✅ Use AI-powered InsurTech that keeps all the data within Australia

Consider InsurTech solutions that store and process all data domestically. Several options are available, and Brokernote is one of them.

We’re an Australian company based in Melbourne, familiar with Australian privacy laws, and we ensure all your data remains securely within Australia.

⚠️ Risk #3 - No contractual safeguards

John regularly uses ChatGPT to help with client queries and document preparation, entering his clients’ personal and sensitive information into the platform. ChatGPT provides fast and helpful responses, but John should be aware that because he uses an individual ChatGPT account, there are no specific contractual safeguards between him and OpenAI regarding the protection of his clients’ data, apart from the standard terms of service.

These standard terms do not offer the same level of assurance or the specific protections that a contract with a local InsurTech provider may provide. Contractual safeguards can bring clarity to the following critical issues:

- Data privacy and confidentiality

- Protection against data leakage or hacking

- Each party’s liability

- Whether data may be sold, traded, or otherwise misused

Without these contractual safeguards, responsibility for any privacy breach or misuse of client data falls entirely on the broker. There is no negotiated protection or recourse, unlike when contracting with an Australian InsurTech company that is familiar with local privacy laws and can offer tailored agreements protecting both the broker and their clients.

Possible Solutions

✅ De-identify clients’ personal and sensitive information

See above for more detail.

If OpenAI does not receive personal or sensitive client information, this may mitigate some privacy and compliance risks associated with a lack of contractual safeguards.

✅ Consider ChatGPT’s Team plan

Upgrading to ChatGPT’s Team plan offers additional data privacy terms, support, and encryption of inputted data. Plans like this are better suited to organisations with higher compliance requirements, such as brokerages. The Team plan terms are generally entered into by an organisation and should be reviewed to confirm whether they meet the brokerage’s data privacy needs.

✅ Contractual relationships with InsurTech solutions

Consider Australian InsurTech providers that offer robust, enforceable contracts specifically addressing data privacy. At Brokernote, our expertise in local Australian privacy regulatory obligations and compliance-focused product design ensure that brokers can meet their data privacy obligations with confidence.

Conclusion

Staying on top of data privacy is more important than ever for Australian insurance brokers as AI becomes part of daily operations. With regulators like ASIC closely monitoring AI use in the industry, brokers must be proactive in managing compliance risks, especially when using tools like ChatGPT that may train on client data, transfer information overseas, or lack contractual safeguards.

At Brokernote, we understand the unique privacy challenges faced by Australian brokers. We’re well-versed in local Australian privacy laws and are dedicated to helping brokers meet their compliance obligations with confidence. Transparency is at the core of our approach, and we’re committed to ensuring your client data is managed securely and ethically.

We’re currently developing an AI toolkit for Australian insurance brokers, enabling them to leverage the power of AI while keeping their data onshore and their clients’ information safe.

To learn more, visit our website, or contact us via email at scott@brokernote.com.au.

We’re always happy to connect with brokers and insurance professionals, and we’re excited to offer opportunities for beta-testing our latest solutions.

Reach out, and let’s work together to shape the future of AI-powered broking in Australia.

Regards,

Scott
